Changing How I Use AI: All Models from One Interface (Without Subscriptions)

- Jul 22, 2025

Updated: Aug 13, 2025

Why I Stopped Paying for ChatGPT Plus, Claude, and Others

If you've ever had five AI chat tabs open at once, bouncing between them and copying responses back and forth, you're not alone. Add up several monthly subscriptions and you realize you're paying dozens of dollars a month for features you only partly use.

What if There Was One Interface for All AI Models?

Imagine having one interface that you can run locally or for your team. You could set your own budgets, users, and access controls.

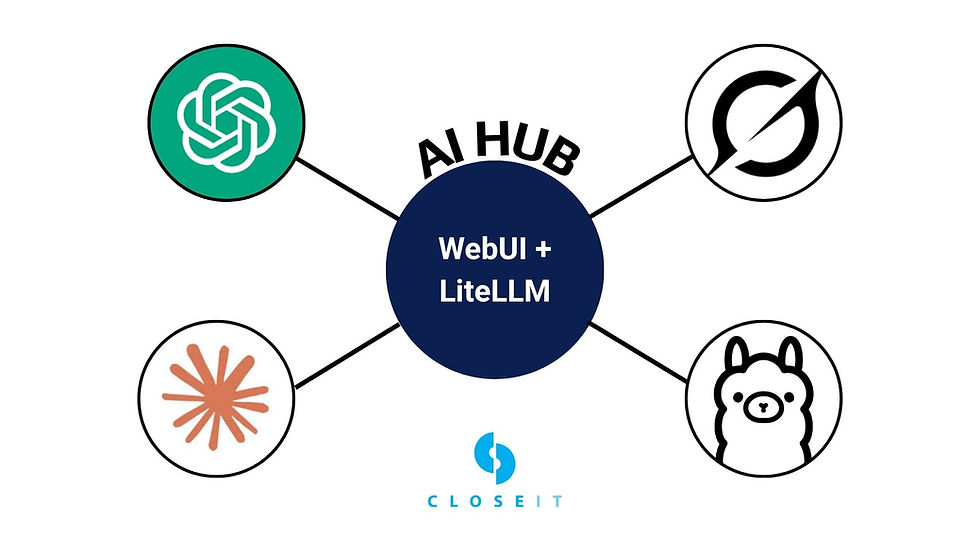

This isn't sci-fi. It's called Open WebUI + LiteLLM Proxy, and it might be the smartest way to access AI today.

What is Open WebUI + LiteLLM Proxy and Why I'm Excited About It

Open WebUI is an open-source interface that functions like ChatGPT. However, it has one significant difference: you can connect it to all major AI providers (OpenAI, Anthropic, Google) or even local models like Llama 3. This creates a central AI hub accessible through the web.

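LiteLLM is a middleware proxy that translates Open WebUI requests into each provider's own API format. It exposes a single OpenAI-compatible endpoint, so Open WebUI only ever talks to LiteLLM. Simply put, you start LiteLLM, add your API keys from the various providers, point Open WebUI at it, and you're done.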
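To make the "one endpoint for everything" idea concrete, here is a minimal sketch of a request sent straight to the proxy. It assumes LiteLLM is listening on localhost:4000 and that `LITELLM_KEY` holds a key the proxy accepts. The helper names `chat_payload` and `ask_model` are my own and are not part of LiteLLM.

```shell
# Assumptions: LiteLLM listens on localhost:4000 and LITELLM_KEY holds a key
# it accepts. Model names must match whatever is configured in your proxy.

# Print the JSON body of an OpenAI-style chat request.
# $1 = model name, $2 = prompt (kept simple: no JSON escaping of quotes).
chat_payload() {
  printf '{"model": "%s", "messages": [{"role": "user", "content": "%s"}]}\n' "$1" "$2"
}

# Send the request to the proxy's OpenAI-compatible endpoint.
ask_model() {
  curl -s "${LITELLM_URL:-http://localhost:4000}/v1/chat/completions" \
    -H "Authorization: Bearer ${LITELLM_KEY}" \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1" "$2")"
}
```

Calling `ask_model gpt-4o "Hello"` and then `ask_model` with whatever name you gave your Claude model hits the same URL both times. Only the model name changes, and that is all Open WebUI has to do as well.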

Advantages That Convinced Me

✅ Pay Only for What You Actually Use

No flat fees. You pay for actual usage. You can set per-user limits: kids might get a monthly limit of $20, colleagues $50, and you can leave your own access unlimited.

In my case:

Before: $60/month for subscriptions

Now: $15-25/month based on usage
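To put those two figures side by side, here is a tiny calculation of the percentage saved. The function name `savings_pct` is just an illustration, and the inputs are the numbers from my own bills above.

```shell
# Percentage saved when moving from a flat monthly fee to a usage-based bill.
# $1 = old monthly cost, $2 = new monthly cost (same currency).
savings_pct() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.0f\n", (before - after) / before * 100 }'
}

savings_pct 60 15   # cheapest month: prints 75
savings_pct 60 25   # most expensive month: prints 58
```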

✅ Access to All Models – From One Place

Claude 3.5, GPT-4o, Gemini, Grok, and even local Llama 3? You can have them side by side in the same interface. Plus, you see the entire conversation in one place, saving time and mental capacity.

✅ Full Control Over Your Data

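Your history and conversations are stored on your own device, not in a ChatGPT or Gemini account. The prompts themselves still travel to the provider through its API, so each provider's API data policy applies. For security and privacy, that difference matters.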

✅ Team or Family Management Capability

You can create accounts for kids or colleagues, set rules, and monitor usage. Add a system prompt like "don't help with cheating," and you can steer how the AI tools are used.
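The spending limits themselves live on the LiteLLM side. As far as I understand LiteLLM's key-management API, a budget-limited key can be requested roughly like this. Treat it as a sketch: the helper names are mine, `LITELLM_MASTER_KEY` is assumed to be the admin key of your proxy, and the fields (`max_budget`, `budget_duration`, `metadata`) should be checked against the docs for your LiteLLM version. How each Open WebUI user maps to a key depends on your setup.

```shell
# Print the JSON body for a budget-limited key.
# $1 = budget in USD per 30 days, $2 = free-form label for the key owner.
budget_key_payload() {
  printf '{"max_budget": %s, "budget_duration": "30d", "metadata": {"owner": "%s"}}\n' "$1" "$2"
}

# Ask the proxy (assumed at localhost:4000) to create the key.
create_budget_key() {
  curl -s "${LITELLM_URL:-http://localhost:4000}/key/generate" \
    -H "Authorization: Bearer ${LITELLM_MASTER_KEY}" \
    -H "Content-Type: application/json" \
    -d "$(budget_key_payload "$1" "$2")"
}
```

With this in place, `create_budget_key 20 kids` and `create_budget_key 50 colleagues` would mirror the limits I mentioned earlier.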

When It Does (and Doesn't) Make Sense

This combination isn't ideal for everyone. Watch out for these cases:

❌ When It's NOT Worth It:

Are you a power user of one model?

If you use just GPT-4o or Claude intensively every day and generate long texts, your API charges can easily exceed $20-30 a month. In that case, a classic subscription is the better deal.
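A quick way to check which side of that line you are on is to estimate the bill from token counts. The sketch below uses placeholder prices (2.50 per million input tokens, 10.00 per million output tokens); they are assumptions for the arithmetic only, so substitute your provider's current price list. The function name `api_cost` is hypothetical.

```shell
# Estimate a monthly API bill from token counts.
# $1 = input tokens, $2 = output tokens,
# $3 = price per 1M input tokens, $4 = price per 1M output tokens (USD).
api_cost() {
  awk -v tin="$1" -v tout="$2" -v pin="$3" -v pout="$4" \
    'BEGIN { printf "%.2f\n", tin / 1e6 * pin + tout / 1e6 * pout }'
}

# Placeholder prices; check your provider's price list.
api_cost 2000000 1000000 2.50 10.00   # prints 15.00
api_cost 4000000 2000000 2.50 10.00   # prints 30.00
```

At these assumed prices, the first month stays under a $20 subscription and the second one is clearly over it.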

✅ When It's the PERFECT Solution:

Do you use AI only occasionally?

If you send only a handful of queries a month, paying per use costs a fraction of a flat subscription.

Do you use multiple models?

This is the golden scenario. For example:

Claude for writing (15-20 queries monthly)

GPT-4o for analysis (15-20 queries monthly)

Gemini for research (15-20 queries monthly)

Local Llama for experiments

Instead of three subscriptions ($60/month), you pay for actual usage, maybe just $8-25.

Do you want control and flexibility?

For teams, developers, or parents wanting AI under control, this is ideal. You can set limits, monitor usage, and keep data on your device.

Are you experimenting with different models?

If you're testing what each model does best, you save not only money but also time switching between interfaces.

How to Install It in 5 Minutes (on MacBook)

Install Docker (Docker Desktop for Mac, from the Docker website).

Open WebUI:

docker run -d -p 3000:8080 -v ${HOME}/.open-webui:/app/backend/data --restart always --name open-webui ghcr.io/open-webui/open-webui:main

LiteLLM Proxy:

git clone https://github.com/BerriAI/litellm

cd litellm

docker-compose up -d

Add API keys (e.g., from OpenAI) in LiteLLM UI (localhost:4000)

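Connect Open WebUI to LiteLLM: in Open WebUI's admin settings, add an OpenAI-compatible connection that points to the LiteLLM address (port 4000) and uses a LiteLLM key. Because Open WebUI runs inside a container, on a Mac that address is typically http://host.docker.internal:4000/v1 rather than localhost.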
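If you prefer to wire this up when the container starts, Open WebUI reads `OPENAI_API_BASE_URL` and `OPENAI_API_KEY` from its environment. Here is a minimal sketch that writes them to an env file. The helper name `write_webui_env` is my own, and `host.docker.internal` assumes Docker Desktop on a Mac.

```shell
# Write an env file that points Open WebUI at the LiteLLM proxy.
# $1 = LiteLLM key, $2 = path of the env file to create.
write_webui_env() {
  cat > "$2" <<EOF
OPENAI_API_BASE_URL=http://host.docker.internal:4000/v1
OPENAI_API_KEY=$1
EOF
}
```

You would then add `--env-file` with that path to the `docker run` command for Open WebUI. In my understanding, connection settings saved later in the admin UI take precedence over these values, so on an existing installation it is simpler to change them in the UI.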

If you want to run local models too, use a tool like LM Studio or Ollama.
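Both tools serve models over a local HTTP address. The defaults below are the ones I know of (Ollama on port 11434, LM Studio's local server on port 1234); either can be changed, so treat them as assumptions. The function name `local_model_url` is only for illustration.

```shell
# Default local API addresses, as far as I know; both are configurable.
# $1 = tool name ("ollama" or "lmstudio").
local_model_url() {
  case "$1" in
    ollama)   echo "http://localhost:11434" ;;
    lmstudio) echo "http://localhost:1234/v1" ;;
    *)        echo "unknown tool: $1" >&2; return 1 ;;
  esac
}
```

For example, `curl -s "$(local_model_url ollama)/api/tags"` should list the models you have pulled in Ollama. From inside the Open WebUI container, replace `localhost` with `host.docker.internal`.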

Conclusion: Why I Wouldn't Change Back

I gained access to all models without flat fees. I have control over my data. I share access with family and colleagues. And I pay less, or at least I pay fairly for what I actually use.

If it makes sense to you to have AI centralized, secure, and under your management, try it yourself. If you'd like a detailed guide on cloud deployment for teams, write in the comments. I'd be happy to prepare another installment.

❓ FAQ – Frequently Asked Questions

What's the main difference compared to ChatGPT?

You have access to all models (GPT, Claude, Gemini, local) from one interface and pay only for actual usage instead of a fixed subscription.

Is it really cheaper than subscriptions?

It depends on usage patterns. If you use multiple models occasionally, you can save roughly 50-80%. If you're a power user of one model, a classic subscription might be the better deal.

How secure are my data and conversations?

Conversation history is stored locally on your device rather than in an account with the provider. Each request still sends the messages needed for context to the provider's API, so the provider's API data-retention policy applies.

Can I share Open WebUI with my team or family?

Yes, you can create multiple user accounts, set individual monthly limits, and monitor usage. This is ideal for managing AI in teams and families.

Is the technical installation complex?

Basic setup on your own computer takes 5-10 minutes. If you can run a Docker container, you can handle it without problems.

Which AI models can I connect?

All major ones: OpenAI (GPT-4o, GPT-3.5), Anthropic (Claude 3.5), Google (Gemini), plus local models like Llama, Mistral, and others via Ollama or LM Studio.

How much does it actually cost in practice?

In my case, an average of $18/month (about 450 CZK) instead of the original $60 (about 1,440 CZK) for three subscriptions. It depends on usage intensity.
